This post follows on from a previous blog post, which shares slides (1-5) from a webinar on "automated transcription for research purposes". This post shares slides 6-10, which include a demonstration of Otter.ai and some key useful features for researchers.

Please feel free to share this post and the images with reference to myself (Caitlin Hafferty). Do get in touch with any comments/questions at caitlinhafferty@connect.glos.ac.uk and follow me on Twitter for updates (@CaitlinHafferty).

| Slide 6 - search in the text |

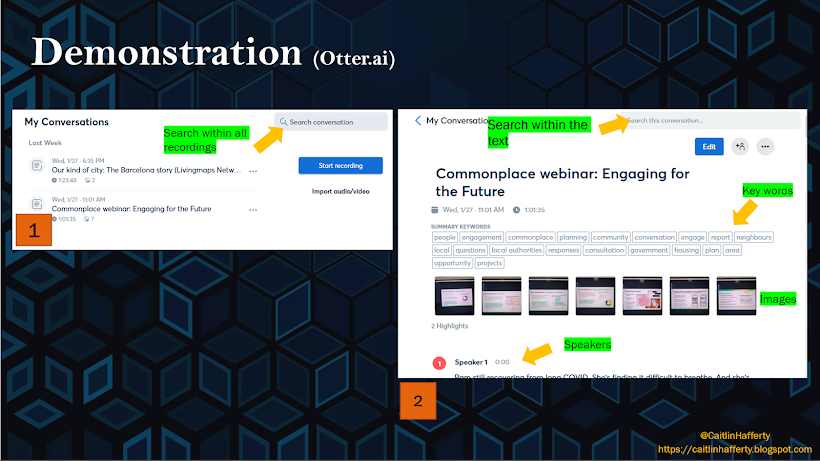

Slide 6 shows how you can search for words/phrases across all of your recordings (e.g. for a particular key word, name, or sentence) and within each individual recording. The app then highlights the matching words for you, so you can easily select what you are interested in (shown again in slide 10).

| Slide 7 - keyboard shortcuts and highlights |

In slide 7, the picture on the left (3) shows all the keyboard shortcuts you can use when navigating your recording and transcript. There are shortcuts for audio and transcript editing, including slowing down and speeding up the audio (useful for skipping quickly over a chunk of irrelevant audio, or slowing down someone who is speaking quickly), inserting paragraph breaks, undoing/redoing an action, etc.

The picture on the right (4) shows the highlights you can create when reading through your transcript or listening to the audio. To do this, you simply select the text you're interested in (click and drag). Otter.ai then stores these highlights, which you can view together as shown; clicking on a highlight takes you to its place in the transcript.

| Slide 8 - keywords, speaker assignment, and word clouds |

The pictures in slide 8 demonstrate how the transcripts (of webinars, interviews, meetings, etc.) show up on the screen. This includes the title, duration, summary key words, speaker assignment, the percentage (%) of time each speaker talks for, embedded photos, and highlights.

Two features are worth highlighting here - percentage speaker time and word clouds. Firstly, I think the % speaker time is a particularly nice feature of (and recent addition to) Otter.ai, which provides useful information about who spoke more (or less) in your meeting, interview, etc.
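To make the % speaker time idea concrete, here is a minimal Python sketch of how such a figure could be calculated from a transcript with timestamps. This is not Otter.ai's own method (which isn't described here). The segment format, the speaker names, and the function name `speaker_time_percentages` are all assumptions made up for illustration.

```python
from collections import defaultdict


def speaker_time_percentages(segments):
    """Return each speaker's share of total speaking time, as a percentage.

    `segments` is a hypothetical list of (speaker, start_seconds, end_seconds)
    tuples, e.g. typed up by hand from a transcript with timestamps.
    """
    totals = defaultdict(float)
    for speaker, start, end in segments:
        totals[speaker] += end - start

    total_time = sum(totals.values())
    if total_time == 0:
        return {}  # nothing was said, so there is nothing to divide up

    return {
        speaker: round(100 * seconds / total_time, 1)
        for speaker, seconds in totals.items()
    }


# Made-up example: a short interview with two speakers.
segments = [
    ("Interviewer", 0, 30),
    ("Participant", 30, 150),
    ("Interviewer", 150, 170),
    ("Participant", 170, 200),
]

percentages = speaker_time_percentages(segments)
print(percentages)
```

In this made-up interview the participant speaks for 150 of the 200 seconds, so they account for 75% of the talking time and the interviewer for 25%.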

Secondly, a word cloud can be automatically generated from the Otter.ai app. Word clouds provide a quick, easy, engaging visual of the key words in your recording. In the example in slide 8, you can quickly see that this webinar was about engagement, people, planning, communities, conversations, etc. - it was a webinar hosted by a company called Commonplace about community engagement in planning decisions.
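For anyone curious about what sits behind a word cloud, the basic idea can be sketched as a simple word count: the more often a word appears, the bigger it is drawn. The Python below is only an illustration under that assumption, not how Otter.ai actually picks its key words. The sample text, the small stop-word list, and the function name `top_keywords` are all invented for the example.

```python
import re
from collections import Counter

# A deliberately tiny stop-word list (an assumption for this sketch);
# real tools use much longer lists of common words to ignore.
STOP_WORDS = {
    "the", "a", "an", "and", "of", "to", "in", "is", "it", "that",
    "we", "for", "on", "with", "this", "are", "was", "about", "how",
}


def top_keywords(text, n=5):
    """Return the n most frequent words in `text`, ignoring stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(
        word for word in words if word not in STOP_WORDS and len(word) > 2
    )
    return counts.most_common(n)


# Made-up snippet in the spirit of a community engagement webinar.
transcript = (
    "Community engagement is about people. Planning works when people "
    "and communities are part of the conversation, and engagement "
    "builds on that conversation with people."
)

keywords = top_keywords(transcript, n=3)
print(keywords)
```

The counts returned here are the sort of numbers a word cloud would turn into font sizes, with "people" drawn largest in this made-up snippet.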

| Slide 9 - three examples of key word generation |

Slide 9 shows three examples of the automatic generation of key words, which you can view as a list or a word cloud. You can quickly see that example 1 is about Barcelona, cities, people, streets, citizens, maps, etc. - this was a webinar by Livingmaps on participatory mapping in Barcelona. Example 2 is Commonplace's webinar on community engagement, and example 3 is a webinar hosted by the GIScience research group about the exploration of landscapes. This is a great way for researchers to review meetings, interviews, webinars, etc., get a quick basic summary of each, and highlight similarities/differences between them.

| Slide 10 - search in the text, embed photos |

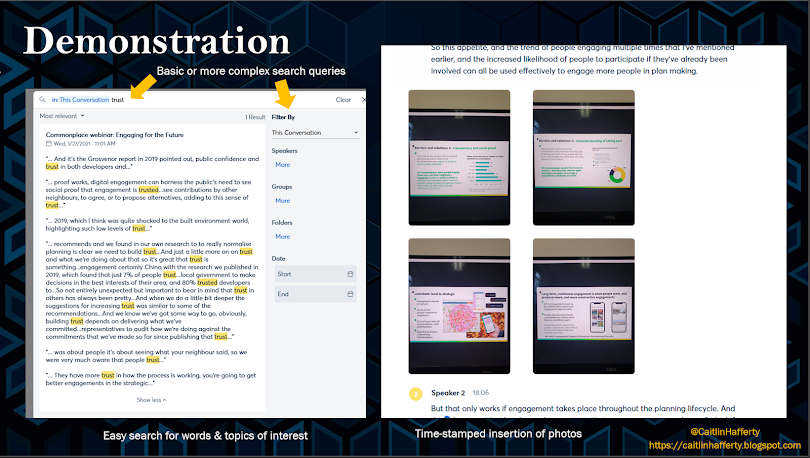

Slide 10 shows in more detail what a basic search query looks like in Otter.ai. In the image on the left-hand side, I've searched for the word 'trust' in the transcript for a webinar on public engagement (trust is a key consideration for 'good' public engagement). This shows all the times that 'trust' (or variants of the word - trusts/trusted/trusting) is mentioned by speakers, which is a useful feature for digging into emerging themes in the text. The image on the right shows how photos taken during the interview are embedded into the text - this is useful for showing slides, images, etc. which were a visual stimulus for the topic of conversation.
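If you have exported a transcript as text, a similar search for 'trust' and its variants can be approximated with a few lines of Python. This is a rough sketch rather than a description of how Otter.ai's search works. The transcript lines, the speaker labels, and the function name `find_variants` are all made up for illustration.

```python
import re


def find_variants(transcript_lines, stem):
    """Find every word starting with `stem` and who said it.

    `transcript_lines` is a hypothetical list of (speaker, text) tuples.
    Matching is a simple prefix match, so 'trust' also catches 'trusts',
    'trusted' and 'trusting' (and would catch 'trustee' too).
    """
    pattern = re.compile(rf"\b{re.escape(stem)}\w*", re.IGNORECASE)
    hits = []
    for speaker, text in transcript_lines:
        for match in pattern.finditer(text):
            hits.append((speaker, match.group(0)))
    return hits


# Made-up transcript lines for illustration.
lines = [
    ("Speaker 1", "Trust is built slowly; people trusted the process."),
    ("Speaker 2", "Without trusting relationships, engagement fails."),
    ("Speaker 1", "The council entrusted us with the data."),
]

hits = find_variants(lines, "trust")
for speaker, word in hits:
    print(f"{speaker}: {word}")
```

Note that 'entrusted' is not picked up, because the pattern only matches words that begin with the search term. Whether that is what you want depends on the theme you are exploring.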

I've written a third blog post which shares the final slides (11-15) exploring some key considerations regarding practical issues, ethics, and privacy/security/GDPR concerns. View it here: https://caitlinhafferty.blogspot.com/2021/03/automated-transcription-for-research3.html